Use the guidelines below to determine how to allocate pod resources for shared locators and how to configure a geocode service in ArcGIS Enterprise Manager.

Optimize geocode services for ArcGIS Enterprise on Kubernetes

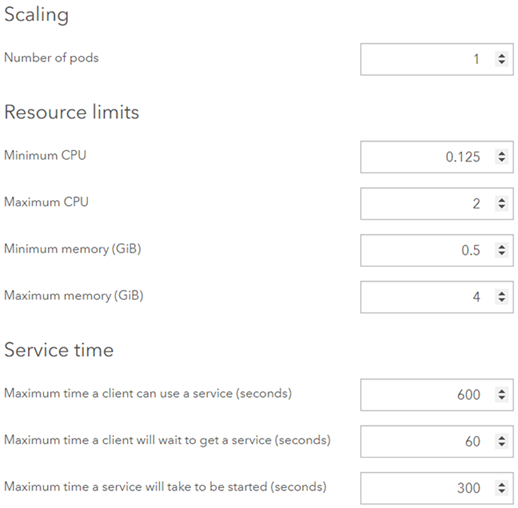

After sharing your locator from ArcGIS Pro, you must allocate the appropriate resources to the pod that is created. Depending on your deployment, you may need to allocate more resources to the pod so that the geocode service can function optimally. A geocode service deployment has the following specifications when first published from ArcGIS Pro:

Use the following values for Maximum memory (GiB) for the pod when Minimum number of instances per pod is set to 1 and Maximum number of instances per pod is set to 1:

| Locator size | Recommended maximum memory value |
|---|---|
| Less than 1 GB | 1Gi per instance. |
| Between 1 GB and 20 GB | 4Gi per instance. This is the default value when sharing from ArcGIS Pro. |
| Over 20 GB | 5Gi per instance. |

Note:

The Minimum number of instances per pod and Maximum number of instances per pod values are set in the Pooling section of the Configuration tab of the Share Locator pane in ArcGIS Pro when the locator is shared to the portal. You can also view or edit the number of instances per pod with an editService REST request or in the ArcGIS Enterprise Administrator API: browse to /admin for the ArcGIS Enterprise on Kubernetes portal, go to Services, click the service, and edit minInstancesPerNode and maxInstancesPerNode.
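As a rough illustration of keeping the two pooling values in sync, the following sketch edits a service definition held as a Python dictionary. It assumes that the service JSON you work with in the Administrator API exposes `minInstancesPerNode` and `maxInstancesPerNode` as top-level keys; the helper name `set_instances_per_pod` and the sample dictionary are hypothetical, and submitting the edited JSON back to the portal is not shown.

```python
import copy
from typing import Any


def set_instances_per_pod(service_json: dict[str, Any], instances: int) -> dict[str, Any]:
    """Return a copy of a service definition with min and max instances per pod set equal.

    Hypothetical helper: assumes minInstancesPerNode and maxInstancesPerNode are
    top-level keys of the service JSON (an assumption, not a confirmed schema).
    """
    if instances < 1:
        raise ValueError("instances per pod must be at least 1")
    edited = copy.deepcopy(service_json)  # leave the caller's dictionary untouched
    # Setting both values to the same number fixes the instance count on each pod.
    edited["minInstancesPerNode"] = instances
    edited["maxInstancesPerNode"] = instances
    return edited


# Hypothetical service definition with one instance per pod.
service = {"serviceName": "MyLocator", "minInstancesPerNode": 1, "maxInstancesPerNode": 1}
updated = set_instances_per_pod(service, 4)
print(updated["minInstancesPerNode"], updated["maxInstancesPerNode"])  # 4 4
```

Copying the dictionary rather than mutating it keeps the original definition available for comparison before you apply the change.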

Depending on the locator size, you may need to update the Maximum memory value for the pod in ArcGIS Enterprise Manager. These recommendations assume one instance per pod. If the pod is scaled up to run a minimum and maximum of eight instances, you must increase the Maximum memory value accordingly. To know exactly how many instances are running on each pod, set Minimum number of instances per pod and Maximum number of instances per pod to the same number.

Note:

Minimum CPU and Minimum memory equate to Kubernetes requests: the amount of CPU and memory guaranteed to the pod. Maximum CPU and Maximum memory equate to Kubernetes limits: the most CPU and memory the pod is allowed to use. Whatever the pod does not use is free for the Kubernetes cluster to use until the pod needs it.
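The mapping from these Enterprise Manager settings to Kubernetes terms can be sketched as a container `resources` stanza, which is the structure Kubernetes uses for requests and limits. The helper `to_k8s_resources` is hypothetical and the values passed to it are placeholders, not ArcGIS defaults; ArcGIS Enterprise on Kubernetes generates the actual pod specification itself.

```python
from typing import Any


def to_k8s_resources(min_cpu: float, max_cpu: float,
                     min_memory_gib: float, max_memory_gib: float) -> dict[str, Any]:
    """Express Minimum/Maximum CPU and memory as a Kubernetes requests/limits stanza.

    Hypothetical helper for illustration only.
    """
    # Kubernetes rejects a request that is larger than its limit.
    if min_cpu > max_cpu or min_memory_gib > max_memory_gib:
        raise ValueError("minimum values must not exceed maximum values")
    return {
        # Minimum CPU / Minimum memory: the amount guaranteed to the pod.
        "requests": {"cpu": str(min_cpu), "memory": f"{min_memory_gib}Gi"},
        # Maximum CPU / Maximum memory: the most the pod is allowed to use.
        "limits": {"cpu": str(max_cpu), "memory": f"{max_memory_gib}Gi"},
    }


# Placeholder values: 0.5 CPU and 1Gi guaranteed, up to 2 CPUs and 4Gi allowed.
resources = to_k8s_resources(0.5, 2, 1, 4)
print(resources)
```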

For example, if you have a locator that is less than 1 GB in size and you set Minimum number of instances per pod to 8 and Maximum number of instances per pod to 8, set the Maximum memory value to 8, since you want to allow the locator 1Gi per instance and there are eight instances on that one pod (1Gi x 8 instances = 8Gi).

As another example, if you have a locator that is more than 20 GB in size and its pod has Minimum number of instances per pod set to 4 and Maximum number of instances per pod set to 4, set the Maximum memory value to 20, since you want to allow the locator 5Gi per instance and four instances are running on the pod (5Gi x 4 instances = 20Gi).
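The calculation in these examples can be sketched as a small Python function: look up the per-instance recommendation from the locator-size table, then multiply by the number of instances on the pod. The function names are hypothetical, the sketch assumes Minimum and Maximum number of instances per pod are set to the same value, and it assumes a locator of exactly 1 GB or exactly 20 GB falls in the middle band, which the table does not state explicitly.

```python
def memory_per_instance_gib(locator_size_gb: float) -> int:
    """Recommended memory per instance (GiB), following the locator-size table."""
    if locator_size_gb < 1:
        return 1  # less than 1 GB
    if locator_size_gb <= 20:
        return 4  # between 1 GB and 20 GB (boundary values assumed to fall here)
    return 5  # over 20 GB


def max_memory_gib(locator_size_gb: float, instances_per_pod: int) -> int:
    """Maximum memory for one pod: per-instance recommendation x instances on the pod."""
    if instances_per_pod < 1:
        raise ValueError("instances_per_pod must be at least 1")
    return memory_per_instance_gib(locator_size_gb) * instances_per_pod


# The two worked examples, using 0.5 GB and 25 GB as illustrative locator sizes.
print(max_memory_gib(0.5, 8))  # 8  (1Gi x 8 instances)
print(max_memory_gib(25, 4))   # 20 (5Gi x 4 instances)
```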

Each time you share a locator, a new pod is spun up for it. Adjust the Maximum memory value for each new pod in ArcGIS Enterprise Manager to get the best performance.

You can set the Number of pods value to a number greater than 1 if appropriate; one pod is spun up by default. Each pod runs the number of instances defined by the Minimum number of instances per pod and Maximum number of instances per pod values, and the Maximum memory value applies to each pod individually. For example, if Maximum memory is set to 4 and Number of pods is set to 2, two pods are spun up, each with 4Gi of allocated memory. You can adjust the Number of pods value in ArcGIS Enterprise Manager.
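Because Maximum memory is a per-pod value, the total footprint of a geocode service grows with the number of pods. The sketch below models the settings with a hypothetical `GeocodeDeployment` class and reproduces the two-pod example; the one instance per pod is an assumption, since the example does not say how many instances each pod runs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GeocodeDeployment:
    """Hypothetical model of a geocode service's settings in ArcGIS Enterprise Manager."""
    number_of_pods: int
    instances_per_pod: int  # Minimum = Maximum number of instances per pod
    max_memory_gib: float   # Maximum memory (GiB), applied to each pod

    def total_instances(self) -> int:
        # Every pod runs the same number of instances.
        return self.number_of_pods * self.instances_per_pod

    def total_max_memory_gib(self) -> float:
        # Each pod gets its own Maximum memory, so the service-wide ceiling scales with pods.
        return self.number_of_pods * self.max_memory_gib


# Two pods with Maximum memory 4 (one instance per pod is assumed).
deployment = GeocodeDeployment(number_of_pods=2, instances_per_pod=1, max_memory_gib=4)
print(deployment.total_instances(), deployment.total_max_memory_gib())  # 2 8
```

Totals like these can help you check whether the cluster has enough capacity before you increase the Number of pods value.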

It is important to find the right balance between the number of instances per pod and the number of pods. Running more instances on each pod typically provides better performance, while running fewer instances per pod across more pods typically provides better availability. The administrator of your ArcGIS Enterprise organization determines the best deployment specifications for the organization.

Generally, each instance on the pod needs one CPU, so the Maximum CPU value should equal the number of instances per pod (the value used for both Minimum number of instances per pod and Maximum number of instances per pod). By default, two CPUs are allocated when the service is first shared from ArcGIS Pro. If you run more than two instances per pod, set the Maximum CPU value for the deployment to the number of instances per pod.
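The one-CPU-per-instance rule can be sketched as a quick check against the default of two CPUs. The function name `recommended_max_cpu` is hypothetical, and the check rejects configurations where the minimum and maximum instance counts differ, because the rule assumes they are set to the same value.

```python
DEFAULT_MAX_CPU = 2  # CPUs allocated when the service is first shared from ArcGIS Pro


def recommended_max_cpu(min_instances_per_pod: int, max_instances_per_pod: int) -> int:
    """Return one CPU per instance; assumes both pooling values are the same number."""
    if min_instances_per_pod != max_instances_per_pod:
        raise ValueError("set Minimum and Maximum number of instances per pod to the same value")
    if max_instances_per_pod < 1:
        raise ValueError("instances per pod must be at least 1")
    return max_instances_per_pod


for instances in (2, 4, 8):
    needed = recommended_max_cpu(instances, instances)
    action = "keep the default" if needed == DEFAULT_MAX_CPU else f"set Maximum CPU to {needed}"
    print(f"{instances} instances per pod: {action}")
```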

Allocate resources for the geocode service

To configure the number of pods, maximum memory, and maximum CPU for the geocode service in ArcGIS Enterprise Manager, complete the following steps:

- Open ArcGIS Enterprise Manager for the ArcGIS Enterprise on Kubernetes organization.

- Sign in as an administrator.

- Click the Services banner.

- Click the geocode service to configure.

- Click the Settings ribbon.

- Change the Number of pods, Maximum memory (GiB), and Maximum CPU values for your organization based on the recommendations in the previous section.

- Click Save.

The service spins up the pods and allocates memory according to your specifications.