Once you've published GIS services, it is best practice to monitor them, for example by viewing service usage statistics, to understand usage patterns and to decide how to adjust resources based on the traffic you observe. As traffic patterns and user demands on your services change, you can respond in a few ways. To meet demand and performance expectations, consider the following ways to optimize service resources:

- Adjust the service mode to maximize dedicated resources for a service.

- Manually scale services by specifying the number of pods to be allocated for a service.

- Enable auto scaling by specifying a threshold by which pods will be automatically allocated for a service.

When determining which option to choose, consider the examples described under Scenarios later in this topic.

Scaling types

You can scale services manually or, using the auto scaling feature, automatically. In both cases, the method by which the services are scaled is characterized as horizontal (in or out) scaling or vertical (up or down) scaling. Services can be scaled regardless of the mode they're configured for, whether shared or dedicated.

- Horizontal scaling—Adds more pods to a service deployment. For example, scaling from one pod to many. Supported for manual and auto scaling.

- Vertical scaling—Adds CPU or memory to the current set of pods in a deployment. Supported for manual scaling.

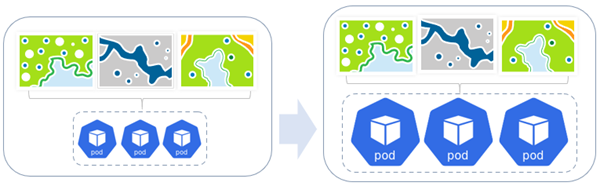

When you increase the number of pods available for a service, the Kubernetes cluster produces additional replicas of the service deployment's existing pods, including their service configuration and service instances within the pods.

Scaling also increases the availability and total throughput of instances for the service, as well as the memory and CPU consumption of the service. Because you're scaling your Kubernetes infrastructure, this option is fault tolerant; pods that fail are automatically restored without affecting other pods. It also allows for independent and isolated scaling without affecting other services or other components within your system.
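The difference between the two scaling types can be sketched with a small model. The following Python snippet is illustrative only: the `Deployment` class, its fields, and the resource figures are hypothetical and are not part of any ArcGIS Enterprise or Kubernetes API. It shows that both approaches raise the total CPU and memory a service consumes, but horizontal scaling does so by adding pod replicas, while vertical scaling resizes the existing pods.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Deployment:
    """Hypothetical model of a service deployment (illustration only)."""
    replicas: int           # number of pods in the deployment
    cpu_per_pod: float      # CPU cores allocated to each pod
    mem_per_pod_gib: float  # memory (GiB) allocated to each pod

    @property
    def total_cpu(self) -> float:
        return self.replicas * self.cpu_per_pod

    @property
    def total_mem_gib(self) -> float:
        return self.replicas * self.mem_per_pod_gib


def scale_horizontally(d: Deployment, replicas: int) -> Deployment:
    """Change the number of pods; each pod keeps its size."""
    if replicas < 1:
        raise ValueError("a deployment needs at least one pod")
    return replace(d, replicas=replicas)


def scale_vertically(d: Deployment, cpu_per_pod: float, mem_per_pod_gib: float) -> Deployment:
    """Change the size of each pod; the pod count stays the same."""
    return replace(d, cpu_per_pod=cpu_per_pod, mem_per_pod_gib=mem_per_pod_gib)


base = Deployment(replicas=1, cpu_per_pod=0.5, mem_per_pod_gib=1.0)
scaled_out = scale_horizontally(base, replicas=4)
scaled_up = scale_vertically(base, cpu_per_pod=2.0, mem_per_pod_gib=4.0)

print(scaled_out.replicas, scaled_out.total_cpu, scaled_out.total_mem_gib)  # 4 2.0 4.0
print(scaled_up.replicas, scaled_up.total_cpu, scaled_up.total_mem_gib)     # 1 2.0 4.0
```

In this sketch both results consume the same total resources, but only the horizontally scaled deployment gains additional replicas, which is what provides the fault tolerance described above.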

Note:

The Kubernetes cluster on which your organization is deployed has a finite number of compute nodes. By scaling many GIS services manually or automatically, your organization may reach the limit of compute resources allotted to ArcGIS Enterprise on Kubernetes. If this occurs, work with your IT administrator to add more nodes to the Kubernetes cluster. Consider using a cluster autoscaler to address this in your environment.
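As a rough illustration of this note, the following Python sketch checks whether a planned set of deployments fits within a cluster's capacity. All names and numbers are hypothetical. In practice, Kubernetes schedules pods node by node and reserves resources for system components, so a cluster-wide sum like this is only a first approximation.

```python
def remaining_capacity(allocatable_cpu, allocatable_mem_gib, deployments):
    """Return (cpu, mem_gib) left after summing the requests of all deployments.

    deployments: iterable of (replicas, cpu_per_pod, mem_per_pod_gib) tuples.
    A negative value means the plan exceeds the cluster's capacity.
    """
    cpu_requested = sum(replicas * cpu for replicas, cpu, _ in deployments)
    mem_requested = sum(replicas * mem for replicas, _, mem in deployments)
    return allocatable_cpu - cpu_requested, allocatable_mem_gib - mem_requested


# Hypothetical cluster: 3 nodes with 8 cores and 32 GiB each.
cluster_cpu, cluster_mem_gib = 24.0, 96.0

# Hypothetical plan: (replicas, CPU per pod, GiB per pod)
plan = [
    (8, 1.0, 4.0),  # shared feature service deployment
    (4, 2.0, 8.0),  # dedicated map service
    (6, 2.0, 8.0),  # utility service, temporarily scaled out
]

cpu_left, mem_left = remaining_capacity(cluster_cpu, cluster_mem_gib, plan)
if cpu_left < 0 or mem_left < 0:
    print(f"Plan exceeds capacity: CPU {cpu_left:+.1f} cores, memory {mem_left:+.1f} GiB")
else:
    print(f"Plan fits: CPU {cpu_left:.1f} cores and {mem_left:.1f} GiB to spare")
```

With these example figures the plan requests 28 cores and 112 GiB, which exceeds the hypothetical cluster, so more nodes would be needed before scaling out.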

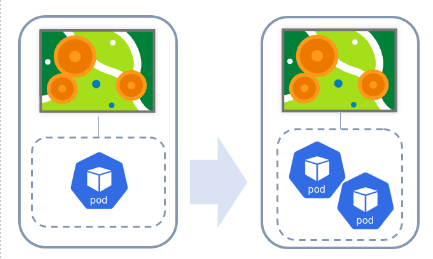

Horizontal scaling

Horizontal scaling adds more pods to a service deployment, for example, scaling from one pod to many. It is supported for both manual and auto scaling.

The example above illustrates horizontal scaling for a geoprocessing service that is running in dedicated mode. As additional pods are needed, the service deployment is adjusted accordingly, either manually or automatically. In this example, an additional pod replica is added to the service deployment.

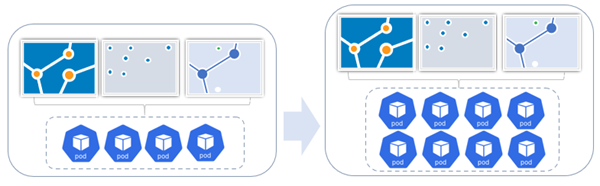

In the following example, a shared feature service deployment is scaled horizontally by adding pod replicas to the deployment. In the initial set of shared resources, there are three feature services supported by four pods. As steady demand has increased, the pods in the service deployment have increased to eight.

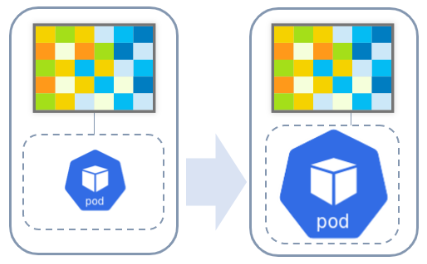

Vertical scaling

Vertical scaling adds CPU or memory to the current set of pods in a deployment and is supported for manual scaling.

The example above illustrates vertical scaling for an image service running in dedicated mode. As additional capacity is required, the dedicated pod or pods can be adjusted manually. In this case, increased resources (CPU, memory, or both) are allocated to the pod.

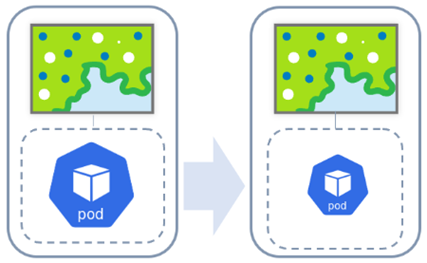

In another example, when a map service in dedicated mode no longer requires additional resources, its resources are scaled down vertically.

In the following example, vertical scaling is used for a shared map service deployment. As more CPU or memory is required, the shared pods can be adjusted accordingly. In this case, increased CPU or memory resources are allocated to the three participating pods.

Scenarios

To meet performance demands while conserving resources used by your organization, it's important to understand when and how to scale the resources available to your services. The following examples are hypothetical scenarios that organization administrators need to consider when scaling their resources:

- A web map in a public organization is suddenly receiving high traffic volume and users are experiencing performance delays. The organization administrator views the system logs and determines that a map service used by the web map is overburdened. First, they may change the service mode from using shared resources to using dedicated resources. Next, they can increase pod replicas for that service's deployment. By providing dedicated resources for the map service, the administrator ensures that the high traffic for the service is handled without performance issues.

- A surveying company has accumulated hundreds of feature services in their organization. All of them are set to shared mode, so there is one service deployment supporting them. No service receives high traffic, but the overall use of the organization's GIS content is burdening the service deployment. The organization administrator chooses to manually scale the service by increasing the number of pod replicas in the service deployment. With more shared instances running, traffic to the organization's many feature services is adequately handled.

- During a content migration project, a city government's GIS organization is republishing many web maps and web layers to their organization. Due to time constraints, they want to complete this quickly. Because publishing the services underlying the web maps and web layers is performed by the PublishingTools utility service, the machine resources available to that utility service determine how quickly publishing can occur. The organization administrator temporarily increases the pod replicas in the PublishingTools service deployment to improve publishing efficiency during the project. After the project is complete, they decrease the pod replicas in the service deployment to conserve machine resources.

In the first two scenarios above, where either a single map service is overburdened or the combined use of many feature services overburdens the system, manually scaling the dedicated map service or the shared feature service deployment may not be the most practical method. Manual scaling requires constant monitoring of the load and an understanding of load patterns to determine how many pods to scale to. If the load is intermittent and unpredictable, manually adding a large number of pods wastes resources whenever the load is light. At the same time, the system must still be able to scale out when heavier loads require more pods.

This may be an optimal case for auto scaling. Auto scaling allows you to maintain a minimum number of pods and to set a maximum number of pods that the system can scale out to, based on CPU thresholds you set. The system automatically detects when to increase or decrease the number of pods in response to changes in load, which removes the need for constant monitoring and conserves resources.

Adjust the service mode

If a map or feature service that's using shared resources is receiving constant traffic, you can adjust the service mode to use dedicated resources. This opens a new service resource pool that is dedicated to that service.

Manual scaling

When demand for services is predictable or steady, you can manually scale a service deployment by specifying the number of pods that are allocated to a service or by increasing the resources (CPU or memory) available to the service. This option is useful when the resources serving the service are inadequate and users are experiencing performance delays.

If, after monitoring the service, you see that demand has increased, or if performance issues are reported for the service, you can increase the number of pods as needed. When demand subsides, you can decrease the number of pods.
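One way to turn monitoring data into a pod count is a simple capacity estimate. The following Python sketch assumes you have measured a peak request rate from usage statistics and an approximate sustainable request rate per pod; both figures, the `headroom` parameter, and the function name are hypothetical and would need to be determined for your own services.

```python
import math


def pods_needed(peak_requests_per_sec, requests_per_sec_per_pod, headroom=0.2):
    """Estimate the pod count for a steady, predictable load.

    headroom adds a safety margin (0.2 = 20 percent) on top of the observed peak.
    """
    if requests_per_sec_per_pod <= 0:
        raise ValueError("per-pod throughput must be positive")
    required = peak_requests_per_sec * (1 + headroom) / requests_per_sec_per_pod
    return max(1, math.ceil(required))


# Demand has grown to a peak of 120 requests/s; one pod sustains about 25 requests/s.
busy = pods_needed(120, 25)
# Demand later subsides to a peak of 40 requests/s.
quiet = pods_needed(40, 25)
print(busy, quiet)  # 6 2
```

An estimate like this is only a starting point; continue to monitor the service after each adjustment.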

Auto scaling

When service demand is unpredictable or intermittent, you can enable auto scaling to maximize efficiency across system resources.

When you enable auto scaling on a service, the pod replicas in a service deployment automatically increase or decrease to meet demand. The service deployment uses the criteria you define, such as CPU or memory thresholds, to calculate whether more or fewer pods are needed and adjusts accordingly.
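This topic does not state the exact calculation used. As a sketch, the following Python function applies the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, on the assumption that auto scaling here behaves similarly: the desired pod count is the current count multiplied by the ratio of observed to target utilization, rounded up, and then kept within the minimum and maximum you configure. The function and parameter names are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_cpu_pct, target_cpu_pct, min_pods, max_pods):
    """Approximate an HPA-style decision (assumption: HPA-like behavior).

    desired = ceil(current_replicas * current_utilization / target_utilization),
    clamped to the range [min_pods, max_pods].
    """
    if target_cpu_pct <= 0:
        raise ValueError("target utilization must be positive")
    raw = math.ceil(current_replicas * current_cpu_pct / target_cpu_pct)
    return max(min_pods, min(max_pods, raw))


# Four pods, target of 60 percent average CPU, allowed range of 2 to 10 pods.
print(desired_replicas(4, 90, 60, 2, 10))   # 6  (load above target: scale out)
print(desired_replicas(4, 15, 60, 2, 10))   # 2  (load well below target: scale in to the minimum)
print(desired_replicas(4, 300, 60, 2, 10))  # 10 (capped at the maximum)
```

The minimum keeps a baseline of pods available during quiet periods, and the maximum prevents a traffic spike from consuming all cluster resources.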

Role of service instances

Service deployments that are not hosted, such as map, image, geoprocessing, or geocoding services, contain service instances that run within each pod of a service's deployment. These instances represent the capacity of each pod and are the underlying processes that handle requests to these services. Adjusting and scaling these processes is supported; however, it is recommended that you instead adjust and scale at the pod level to leverage the benefits of Kubernetes (fault tolerance, isolation, resiliency, auto scaling, and so on).